Has anybody else noticed and pointed out that one of Bing's new #ChatGPT demos with citations is lying about what those sources say?

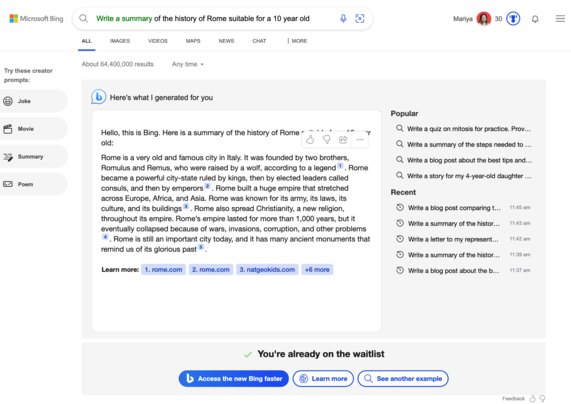

This is the sample prompt we get for "summary" - about Roman history for a 10 year old.

It links to sources for specific claims.

Yet when I look up many of these claims on those pages... they aren't there.

@mariyadelano Always check the source... mistakes, omissions and plagiarism are just as likely to be found by AIs like ChatGPT or Bard...

That's interesting - we know that ChatGPT tends to compose "Schrödinger Facts" (https://sentientsyllabus.substack.com/p/chatgpts-achilles-heel), but I would have thought that it should be pretty straightforward to fix that by intersecting its statements with sources. As you noticed, getting that right seems to take more than just putting keywords into a Bing search.

Thanks!

@boris_steipe admittedly, my writing students back in the day sucked at figuring out what sources said too. And they were very smart college students.

@mariyadelano thanks for sharing, not surprising from what I’ve seen of XAI. We can’t make a haystack and expect not only to find the needle but also to know where a single piece of hay came from. (Yay metaphors.)

@paulmwatson I actually have not heard the term XAI in years, thank you for reminding me of it.

And yep - the tasks that we are expecting ChatGPT to perform are a lot more complex than what that technology was designed for.

I think the hype is getting away from the people working on these models at this point.